Publish your Data to the QDR

To make your data reusable in the European infrastructure you must let the European Commission know that you want to publish a dataset. This is done in the Qualification Dataset Register (QDR).

The QDR offers different ways to provide the files containing your datasets, so that whatever infrastructure approach you have is supported.

The possibilities are:

- Hosted on your server - Automatic Update

With this method, you host the data on your server and have the data automatically retrieved by the QDR. You specify a URL where the data will be continuously maintained and where the QDR can regularly check for updates.

- Hosted on your server - API update

With this method, you host the data on your server and notify the QDR through an API call whenever there is an update. The QDR provides you with an API key that authenticates your calls.

- Hosted on your server - Manual Update

With this method, you host the data on your server and manually notify the QDR using the web platform user interface any time there is an update. You need to provide a unique URL of the hosted dataset every time you publish new data.

- Manual upload

With this method, the data is hosted only on the QDR server, and you upload it manually using the web platform user interface. You need to upload a new file every time you publish new data.

The first two options are preferable to the last two, as they ensure that the information published through Europass stays up to date.

Automatic updating of data hosted on national servers is the recommended approach, so it is strongly recommended to use one of the first two options.

As a publisher, you are recommended to maintain one dataset for Accreditations, one for Qualifications and one for Learning Opportunities, but you can publish more than one dataset per data type if necessary.

When you publish data, it is important to keep your data stable by:

- Keeping your data accessible;

- Keeping your identifiers persistent and unique;

- Keeping your data up to date;

- Keeping your data versioned.

In this section, you can find the details on how to prepare and publish your data on Europass.

When deciding which information to publish, there is one important rule to follow:

Unless you are responsible for doing so under your national laws or internal codes of conduct, publish only data that you own.

You are the ‘owner’ when you define, describe, revoke and manage the data. Publishing data that you do not own may lead to unnecessary data redundancy. The QDR sets out a specific publishing agreement that must be signed before publication.

When publishing to the QDR, your datasets will have to be in ELM format, using either the Learning Opportunities and Qualification (LOQ) application profile or the Accreditation application profile. You can access the schemata documentation here.

- LOQ Application Profile: Providing information about Learning Opportunities and Qualifications. The ELM allows information to be recorded in a unified way. Information about learning opportunities and qualifications, including descriptions of qualification standards, can be used for course catalogues, training announcements and learning opportunity databases, so that universally comprehensible data can easily be exported and described in the same way across borders.

- Accreditation Application Profile: Publishing information on licensing and accreditation of educational institutions and/or their programmes, as well as issuing accreditation credentials to licensed or accredited organisations.

To learn more about the ELM, please visit the ELM in the QDR page. You can also visit the ELM datamodel browser here.

The first real step towards publication is to assign an identifier to every concept. The questions this raises are answered below.

An identifier uniquely identifies the concept you publish information about. This is necessary so that you can link your information to other information on the web without the risk of losing track of where it comes from. On the web, different sources can publish information about the same concept. How do we know they are talking about the same concept? By looking at the identifier. If someone else wants to refer to your concept, they will use its identifier to do so.

Every ‘concept’ needs to get an identifier. A concept is an ‘entity’ you want to publish information about: a learning opportunity, an organisation, a country, an awarding body, …

Some of these concepts will already have an identifier. To know which concepts have an identifier and which do not, you must check the ELM schema. Every “instance” of a class needs an identifier.

A university provides foreign-language courses as learning opportunities:

- Icelandic as a second language;

- Dutch for foreigners.

These two different learning opportunities will each get a different identifier.

A secondary vocational education institution provides professional courses as learning opportunities:

- Professional course of kitchen/pastry technician;

- Professional course of restaurant/bar technician.

These two different learning opportunities will each get a different identifier.

A university awards different qualifications in the engineering department:

- Bachelor of industrial sciences;

- Master of industrial sciences: Chemical engineering;

- Master of industrial sciences: Electronic engineering.

A Vocational Education and Training (VET) provider awards different VET qualifications in the field of ICT:

- Qualification of ICT worker;

- Qualification of ICT service worker;

- Qualification of ICT service desk manager.

In both cases, these three different qualifications will each get a different identifier.

The identifier must be globally unique: no other identifier anywhere in the world is the same.

The identifier must be persistent: it should not change when the concept itself changes (for example, when it changes location, or when a learning opportunity changes its name). Once you assign an identifier, it must always refer to the same concept, even if the concept no longer exists. This is needed because other systems might still use the identifier to refer to the concept.

Ideally, the identifier is also dereferenceable: anyone who looks up the identifier can retrieve information about the concept. However, this is not a requirement.

You can design identifiers however you like, as long as they are unique and persistent.

If your organisation uses a system to assign codes to concepts, such as a national code for each qualification, you can start from these codes to build identifiers. If you prefer to use the code itself as the identifier, you must make sure that it is persistent and globally unique.

The best practice is to use URIs (Uniform Resource Identifiers) as identifiers, because the URI approach builds uniqueness and persistence into the identifier strategy. You can use identifiers other than URIs, but in that case you may have to find your own way to ensure uniqueness and persistence.

Both options are explained with examples below.

An example of a URI structure is http://data.domain.eu/collection/type/key, made up of the following parts:

| Part | Description | Example |
| --- | --- | --- |
| domain (required) | Start from a domain name that your organisation owns. | University ABC has its website at http://www.universityABC.nl. They register http://data.universityABC.nl as a domain name for building identifiers. Remark: adding “data” shows that this is for publishing data, not documents. It is not required; however, you might have to register this as a new domain name. |
| collection (recommended) | A collection subdivides the domain into collections of entities. | http://data.universityABC.nl/courses |
| type (recommended) | The type defines what kind of entity is described. | http://data.universityABC.nl/courses/learningopportunity or http://data.universityABC.nl/courses/qualifications |
| key (required) | The key makes the entity unique. | http://data.universityABC.nl/courses/learningopportunity/001 or http://data.universityABC.nl/courses/qualifications/Q1234 |
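As an illustration of the structure in the table, the minimal Python sketch below assembles identifiers from the parts domain/collection/type/key. The function `build_uri` and its parameter names are hypothetical, not part of the QDR or the ELM; it only joins the parts with a single '/' and treats domain and key as required, collection and type as optional.

```python
from typing import Optional


def build_uri(domain: str, key: str,
              collection: Optional[str] = None,
              entity_type: Optional[str] = None) -> str:
    """Join URI parts in the order domain/collection/type/key.

    Hypothetical helper: 'domain' and 'key' are required,
    'collection' and 'entity_type' are optional (recommended).
    """
    if not domain or not key:
        raise ValueError("domain and key are required")
    # Strip slashes at the joins so the parts are separated by exactly one '/'.
    parts = [domain.rstrip("/")]
    for part in (collection, entity_type, key):
        if part:
            parts.append(part.strip("/"))
    return "/".join(parts)


print(build_uri("http://data.universityABC.nl", "001",
                collection="courses", entity_type="learningopportunity"))
# http://data.universityABC.nl/courses/learningopportunity/001
```

Keeping the key as the last, independent part means you can rename collections in your own systems without touching keys, but once published, the full URI should not change.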

Because you are the only owner of your domain, URIs built on it cannot clash with identifiers created by anyone else. Within your domain, it is your responsibility to manage the keys and keep them unique.

If your organisation already uses a system to assign codes to the concepts you want to identify, you can use these codes as identifiers. In that case, you should put a mechanism in place to keep the codes persistent and unique. The QDR makes these identifiers globally unique by combining them with a namespace that you define during publication.

Best practice is still to use these codes to build URIs, which builds in uniqueness and persistence. See ‘Use URIs as identifiers’ above.

Learning Opportunities Example: In the national database, learning opportunities get a national ID code when they are officially recognised by the government. Everyone can consult these codes in the register. These codes are unique and persistent because they are managed at national level. You could use these codes, without modification, as identifiers for the learning opportunities you want to publish information about. During publication in the QDR, these codes are combined with a namespace, which makes them globally unique.

Qualifications Example: In the Netherlands, qualifications get a CROHO code when they are officially recognised by the government. For example, when you study arts & crafts, the qualification you obtain is “Associate Degree Arts & Crafts”, with code 80078. Everyone can consult these codes in the online CROHO register. These codes are unique and persistent because they are managed at national level. You could use these codes, without modification, as identifiers for the qualifications you want to publish information about. During publication in the QDR, these codes are combined with a namespace (e.g. https://apps.duo.nl/MCROHO), which makes them globally unique.
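To picture how a namespace turns a local code into a globally unique identifier, here is a minimal sketch. This guide does not describe the exact joining rule the QDR applies, so the sketch assumes simple concatenation with a '/' separator; `to_global_id` is a hypothetical helper. It also checks that the namespace has a URL structure, as the ‘Namespace’ field of a dataset requires.

```python
from urllib.parse import urlparse


def to_global_id(namespace: str, local_code: str) -> str:
    """Combine a namespace and a local code into a single identifier.

    Assumption: plain concatenation with '/', unless the namespace already
    ends with '/' or '#'. Illustrative only, not the QDR's documented rule.
    """
    parsed = urlparse(namespace)
    # The namespace is expected to have a URL structure, e.g. "http://example.com/".
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"namespace is not an http(s) URL: {namespace!r}")
    if not local_code:
        raise ValueError("local code must not be empty")
    separator = "" if namespace.endswith(("/", "#")) else "/"
    return f"{namespace}{separator}{local_code}"
```

For example, `to_global_id("https://apps.duo.nl/MCROHO", "80078")` gives "https://apps.duo.nl/MCROHO/80078". The same local code published under two different namespaces yields two different identifiers, which is what makes the combination globally unique.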

In cases where you have your own codes, make sure you keep them unique and persistent at all times.
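One practical way to keep your own codes unique and persistent is to compare each new export with the identifiers you published before. The sketch below assumes you can map every identifier to a stable internal key for the concept (for example, a database primary key); that internal key and the function `check_identifiers` are hypothetical. It reports duplicate identifiers, identifiers reused for a different concept, and concepts whose identifier has changed.

```python
from collections import Counter


def check_identifiers(previous: dict[str, str],
                      current: list[tuple[str, str]]) -> list[str]:
    """Report uniqueness and persistence problems in a new export.

    previous: identifier -> internal concept key, from earlier publications.
    current:  (identifier, internal concept key) pairs in the new export.
    """
    problems = []
    # Uniqueness: the same identifier must not appear twice in one export.
    counts = Counter(identifier for identifier, _ in current)
    for identifier, n in counts.items():
        if n > 1:
            problems.append(f"duplicate identifier: {identifier}")

    previous_by_concept = {concept: ident for ident, concept in previous.items()}
    for identifier, concept in current:
        # Persistence: an identifier must keep referring to the same concept.
        if identifier in previous and previous[identifier] != concept:
            problems.append(f"identifier reused for another concept: {identifier}")
        # Persistence: a concept must keep the identifier it was first given.
        old = previous_by_concept.get(concept)
        if old is not None and old != identifier:
            problems.append(f"identifier changed for concept {concept}: {old} -> {identifier}")
    return problems
```

Running a check like this before every new version catches the two most common persistence mistakes: recycling a code and re-numbering a concept.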

This section describes a model you can use to structure the information you want to publish.

The ELM is an RDF vocabulary with an RDF ontology and a set of application profiles. Additionally, XML schemata are available to support the encoding of information in XML. The schemata also define controlled vocabularies as fixed value lists for some properties in the schema.

The ELM is applicable in many contexts: it can be used to encode, publish and exchange qualification metadata in several technologies, including RDF and, through the XML schemata, XML.

You can find the schemata documentation here.

Investigate how the metadata schema applies to your data

Look at the classes in the ELM LOQ or Accreditation application profile (depending on the data you are publishing) and compare with the classes of your own information model. Do they correspond to each other? Try to find out how the entities in your model correspond to the classes of the schema.

How are the entities in your own information model related to one another? Look at the properties in the metadata schemata to see if you can use them to express the relationships between the entities.

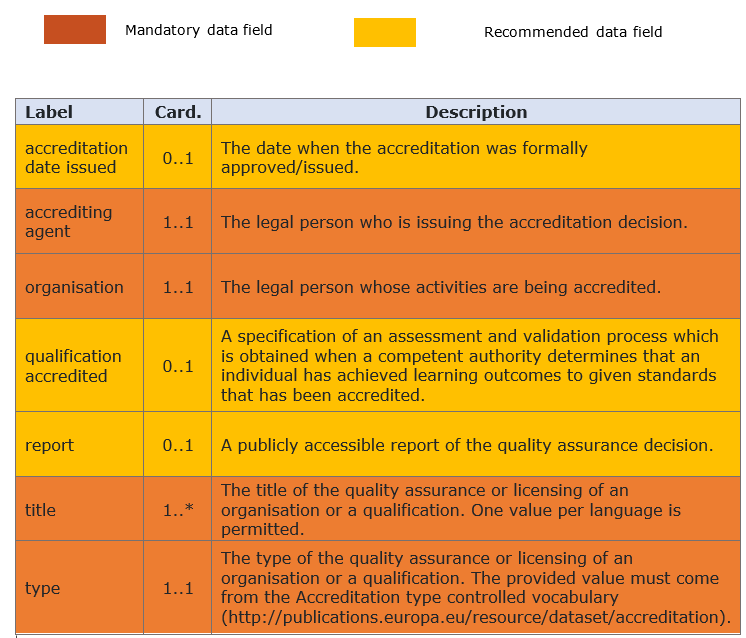

The ELM classes and properties are divided into three kinds of data fields:

- Required data fields: fields that you must publish in any case;

- Recommended fields: fields that you should publish when they are available;

- Optional data fields: fields that you can choose to publish, to give more information on the qualification or learning opportunity.

The more recommended and optional data fields you use, the richer the data becomes. Europass users need to find relevant and up-to-date information about a qualification.

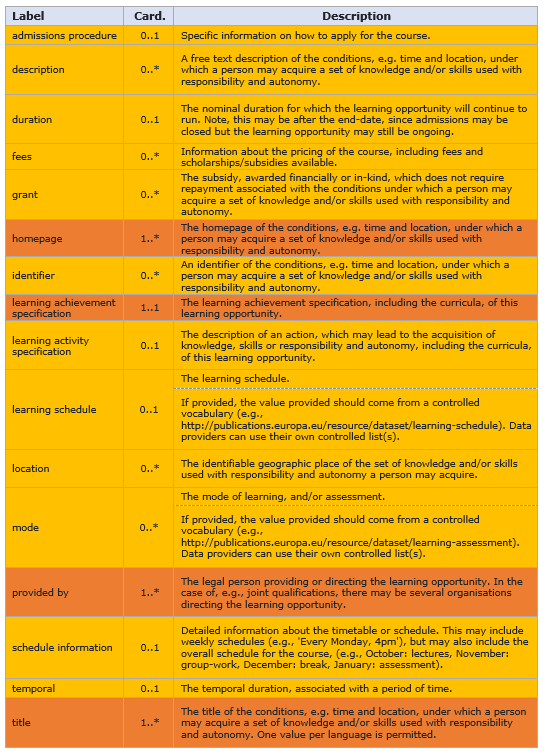

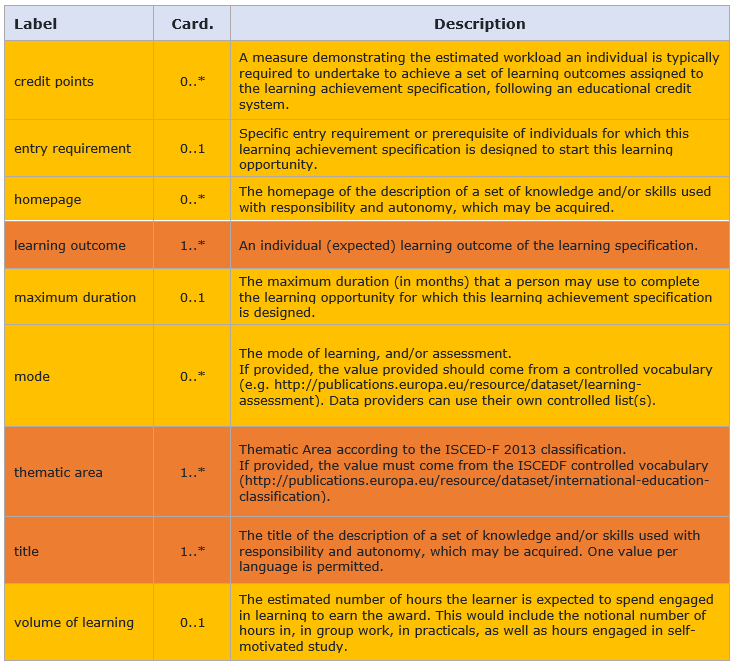

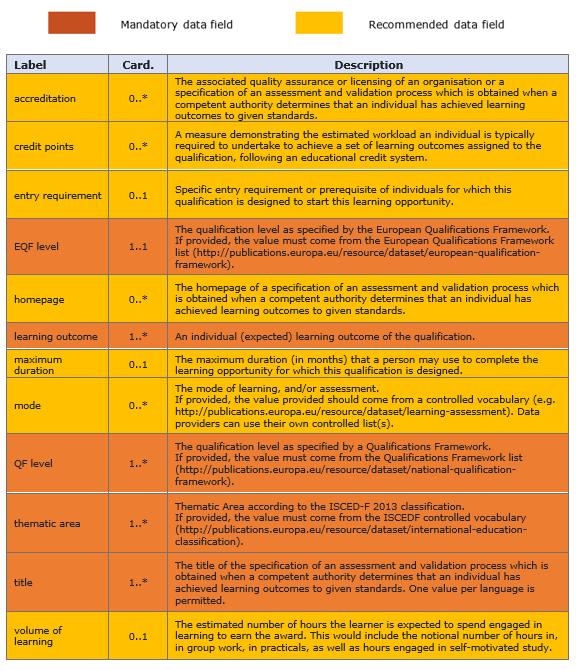

The tables below provide only the required data fields for each type of data (Learning Opportunities, Qualifications and Accreditations), with the following information:

- Label: the property name (first column);

- Cardinality: how many times a given element may occur ('0..' means optional, '1..' means mandatory, '..1' means at most one value, and '..*' means multiple values are allowed);

- Description: contains the definition of the property.
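The cardinality notation can be read as a lower and an upper bound on the number of values a field may hold, where a lower bound of 1 marks a required field. The sketch below parses strings such as '1..1' or '0..*' and checks a record against them. The field names in `EXAMPLE_FIELDS` are hypothetical placeholders, not ELM property names; take the real names and cardinalities from the schema documentation.

```python
from typing import Optional


def parse_cardinality(spec: str) -> tuple[int, Optional[int]]:
    """Parse '0..1', '1..1', '0..*' or '1..*' into (minimum, maximum).

    A maximum of None stands for '*' (potentially multiple values).
    """
    low, high = spec.split("..")
    return int(low), None if high == "*" else int(high)


def check_record(record: dict[str, list], fields: dict[str, str]) -> list[str]:
    """Return one message per field whose number of values breaks its cardinality."""
    errors = []
    for name, spec in fields.items():
        minimum, maximum = parse_cardinality(spec)
        count = len(record.get(name, []))
        if count < minimum:
            errors.append(f"{name}: at least {minimum} value(s) required, found {count}")
        elif maximum is not None and count > maximum:
            errors.append(f"{name}: at most {maximum} value(s), found {count}")
    return errors


# Hypothetical field names, for illustration only.
EXAMPLE_FIELDS = {"title": "1..1", "description": "0..1", "note": "0..*"}
```

With these placeholder fields, an empty record fails only on `title`, while a record with two titles fails on the '..1' upper bound.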

The required and recommended data fields for learning opportunities are provided below. For the full list of data fields, please refer to the QDR Document Library.

A learning opportunity is any course, education or training available to an individual, taking the form of onsite, online or blended learning, in a formal or non-formal context. The opportunity is provided at a given time usually with the aim to obtain a certain learning award, such as a qualification. A learning opportunity can, but does not necessarily have to, be linked to a qualification.

Example: I attend a two-year VET course which leads to a qualification of dental assistant. In parallel, I attend a five-hour PPT crash course organised by the national employment service, which leads to a certificate of attendance. Both are learning opportunities.

Learning Achievement Specification

A description of a set of knowledge and/or skills used with responsibility and autonomy, which may be acquired. For further information about specifications, please refer to the introduction to the ELM.

The required and recommended data fields for qualifications are provided in the table below. The elements for the electronic publication of information on qualifications with an EQF level are listed in Annex VI of the EQF Council Recommendation previously mentioned.

A specification of an assessment and validation process which is obtained when a competent authority determines that an individual has achieved learning outcomes to given standards. A Qualification is a subclass of the Learning Achievement Specification.

Since 2004, learning outcomes as a principle have been systematically promoted in the EU policy agenda for education, training and employment. The 2017 EQF Recommendation defines learning outcomes as ‘…statements of what an individual should know, understand and/or be able to do at the end of a learning process, which are defined in terms of knowledge, skills and responsibility and autonomy.’ This same definition has been integrated within the European Learning Model.

In line with this approach, learning outcomes are a mandatory field when publishing information on qualifications and learning opportunities in accordance with the European Learning Model, helping to bind together the different European tools developed in the last decade.

In June 2024, Cedefop and the European Commission published the European guidelines for the development and writing of short, learning-outcomes-based descriptions of qualifications, developed in the EQF-Europass project group (hereafter ‘the guidelines’). The publication offers recommendations on the formal aspects (length, format) and content (scope, complexity, context) of these descriptions. It also includes practical resources, such as lists of action verbs and qualifiers, to help clearly describe the learning outcomes of qualifications.

We invite any entity that publishes, or is considering publishing, data to the QDR to become familiar with the guidelines, which explain how to draft and structure the learning outcomes published via the QDR.

The provision of learning outcomes data is crucial for the quality of data provided, helping to improve the understanding and portability of qualifications. Short, specific learning outcomes can help individuals showcase the value of their qualifications in job applications or requests for recognition.

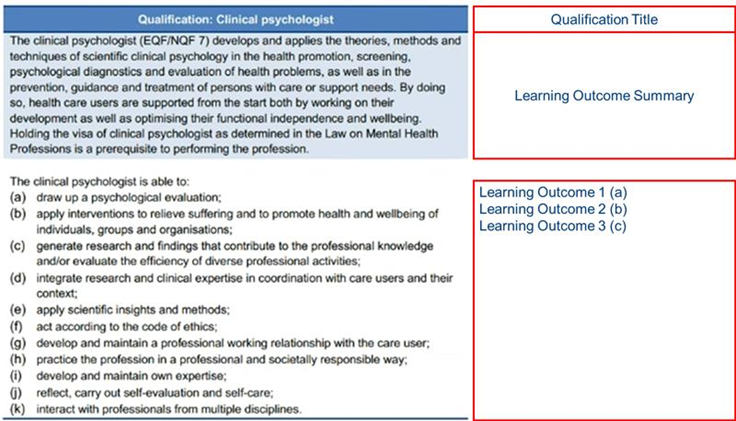

When publishing to the QDR, at least one of the ‘Learning Outcome’ and ‘Learning Outcome Summary’ fields must be used. To publish your learning outcomes in line with the guidelines, you need to use both, where:

- The narrative presenting the overall objectives and orientation of the qualification corresponds to the ‘Learning Outcome Summary’ field (elm:learningOutcomeSummary) in ELM.

- Each individual bullet point expressing a learning outcome is referenced using the ‘Learning Outcome’ field (elm:LearningOutcome).

Illustration of the ELM fields corresponding to the short descriptions of Learning Outcomes

By using these fields, the short descriptions of learning outcomes will be correctly visualised in Europass.
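To show how the two fields divide the work, the sketch below models a short description as a summary narrative plus a list of individual learning outcomes, and checks both the QDR minimum (at least one of the two) and the guidelines' format (both). The class and method names are hypothetical; in published data these parts correspond to elm:learningOutcomeSummary and elm:LearningOutcome, whose exact encoding is defined in the LOQ schemata.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ShortDescription:
    # Narrative on overall objectives and orientation -> elm:learningOutcomeSummary
    summary: Optional[str] = None
    # One entry per bullet point -> elm:LearningOutcome
    outcomes: list[str] = field(default_factory=list)

    def meets_minimum(self) -> bool:
        """At least one of the two fields must be present for the QDR."""
        return bool(self.summary) or bool(self.outcomes)

    def follows_guidelines(self) -> bool:
        """The guidelines' format uses both a summary and bullet points."""
        return bool(self.summary) and bool(self.outcomes)
```

A description with only a summary is accepted by the minimum check but does not follow the guidelines' two-part format.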

Data publishers can also supply additional information for each individual learning outcome (corresponding to the bullet points in the guidelines), as well as related ESCO skills. This provides richer data and helps the course and qualification recommender system in Europass.

For further information on how to structure the data, we invite you to refer to the LOQ schemata files available here.

The required and recommended data fields for accreditations are provided in the table below. For the full list of data fields, please refer to the schema files published here.

If some of your information does not fit any field in the schemata, you may in some cases be able to include it in “AdditionalNote”; if this is not possible, it is better not to publish it. If you believe the information is important and not represented in the schemata, you can suggest additional data fields for future improvement.

Not having information for every class is not a problem: you may use only a subset of the classes (and properties) of the LOQ schema. Although every class has mandatory properties in the LOQ Application Profile, you do not need to populate all classes in your learning opportunity or qualification data. Every Learning Opportunity must be specified by either a Learning Achievement Specification or a Qualification (a special kind of Learning Achievement Specification). If your learning opportunity is not specified by a Qualification, you do not need to supply qualification framework (QF) values, even though the EQF and NQF levels are mandatory properties of the Qualification class.

The European Commission is aware that additional mandatory fields can prevent countries from publishing and delay the publication process. However, additional required fields also enrich the data and act as an incentive to gather data from data providers at national level.

If the data for a mandatory field is not yet available, the European Commission is open to discussing a temporary workaround with the data provider. The goal is to avoid countries being unable to publish because they cannot provide data for the mandatory fields. Any such workaround must be temporary, with a commitment to work towards providing the mandatory fields to best serve Europass end-users.

Some properties of a concept are language-dependent, for example the title of a qualification or learning opportunity, or its homepage.

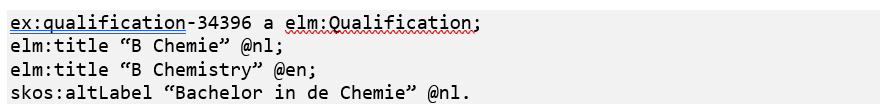

Example 1 (qualifications): In the Netherlands, the official title of a bachelor’s in chemistry is ‘B Chemie’ (CROHO code 34396). In English, the official title is ‘B Chemistry’. An awarding body may also refer to it as ‘Bachelor in de Chemie’, which is an alternative Dutch title. This would be modelled as follows:

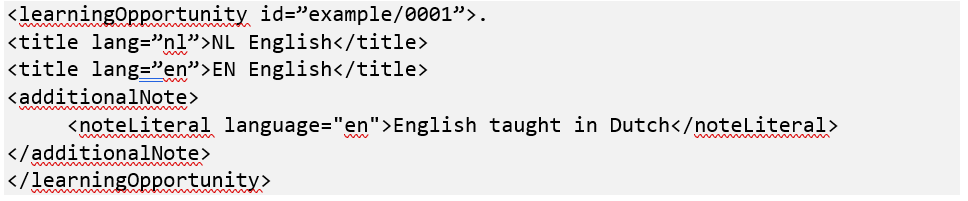

Example 2 (learning opportunities): In the Netherlands, the official title of an opportunity is ‘NL English’. In English, the official title is ‘EN English’. An awarding body may also refer to it as ‘English taught in Dutch’, which can serve as an additional note in English. This would be modelled as follows:

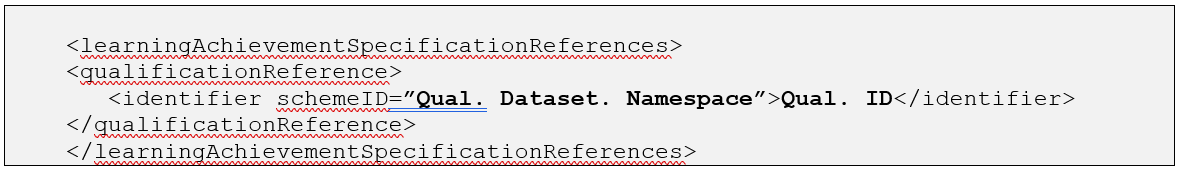
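Both examples follow the same pattern: one preferred title per language, plus optional alternative labels. As a rough illustration only, the sketch below holds Example 1 in a hypothetical Python structure; the class and function names are not part of the ELM, whose actual encoding is shown in the figures above. The English fallback in `title_for` is an assumption of the sketch, not documented QDR or Europass behaviour.

```python
from dataclasses import dataclass, field


@dataclass
class LocalisedTitle:
    preferred: str
    alternatives: list[str] = field(default_factory=list)


# Example 1 from the text, keyed by language code.
titles = {
    "nl": LocalisedTitle("B Chemie", ["Bachelor in de Chemie"]),
    "en": LocalisedTitle("B Chemistry"),
}


def title_for(by_lang: dict[str, LocalisedTitle], lang: str, fallback: str = "en") -> str:
    """Return the preferred title in 'lang', or in the fallback language if missing."""
    chosen = by_lang.get(lang) or by_lang[fallback]
    return chosen.preferred


def all_labels(by_lang: dict[str, LocalisedTitle]) -> set[str]:
    """Every label, preferred or alternative, in any language."""
    return {label for t in by_lang.values() for label in [t.preferred, *t.alternatives]}
```

Keeping alternative titles alongside the official one means a search for ‘Bachelor in de Chemie’ can still find the qualification, while displays use the official title.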

Qualifications and learning opportunities may be linked together to strengthen the network of data and reduce redundant information. Such a link states that a given learning opportunity is specified by a qualification, that is, the opportunity leads to the award of that qualification.

In order to establish such a relation using QDR, your organisation should implement the following steps:

- Publish a qualification dataset: the qualification dataset must always be created first; the learning opportunity dataset then refers to the existing qualifications it contains;

- Note the qualification reference: each learning opportunity must refer to the qualification by its ID and by the namespace of the dataset in which the qualification is located;

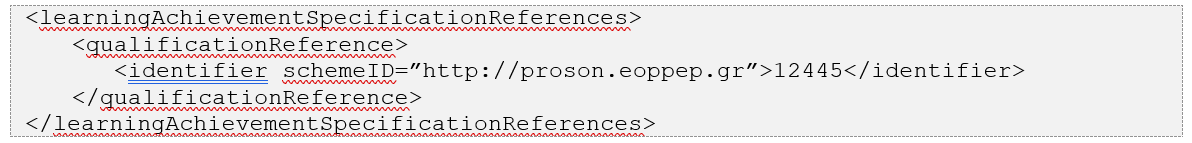

- Publish the reference XML: The XML should indicate the reference to a qualification in the following way:

- Qual. Dataset. Namespace: Namespace of the dataset where the reference qualification is located

- Qual. ID: ID of the qualification that is being referred to

Example: such a structure could look like this:

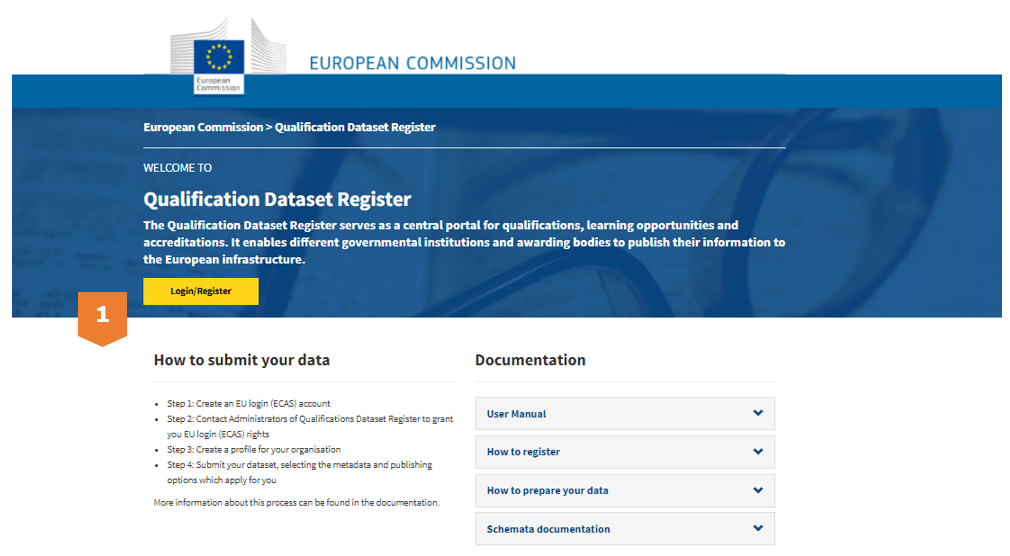
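Because a learning opportunity points to a qualification by the pair (dataset namespace, qualification ID), the reference can only resolve if that qualification has already been published, which is why the qualification dataset must come first. The minimal sketch below checks this before publishing; `QualificationRef`, `unresolved_refs` and the in-memory `published` mapping are hypothetical stand-ins, not QDR APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualificationRef:
    dataset_namespace: str  # 'Qual. Dataset Namespace'
    qualification_id: str   # 'Qual. ID'


def unresolved_refs(refs: list[QualificationRef],
                    published: dict[str, set[str]]) -> list[QualificationRef]:
    """Return the references whose qualification is not in a published dataset.

    published: dataset namespace -> IDs of the qualifications in that dataset.
    """
    return [r for r in refs
            if r.qualification_id not in published.get(r.dataset_namespace, set())]
```

A reference fails either because the ID does not exist in that dataset or because it names the wrong namespace; both cases show up in the returned list.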

This section explains how to publish datasets on the QDR and how to set your preferences for uploading and updating your data.

You can log in to the QDR platform using your EU Login account.

If you have not yet registered on the platform and created a profile for your organisation, please check the Register for the QDR pages, follow the described steps, and then return to this step to find out how to publish your data.

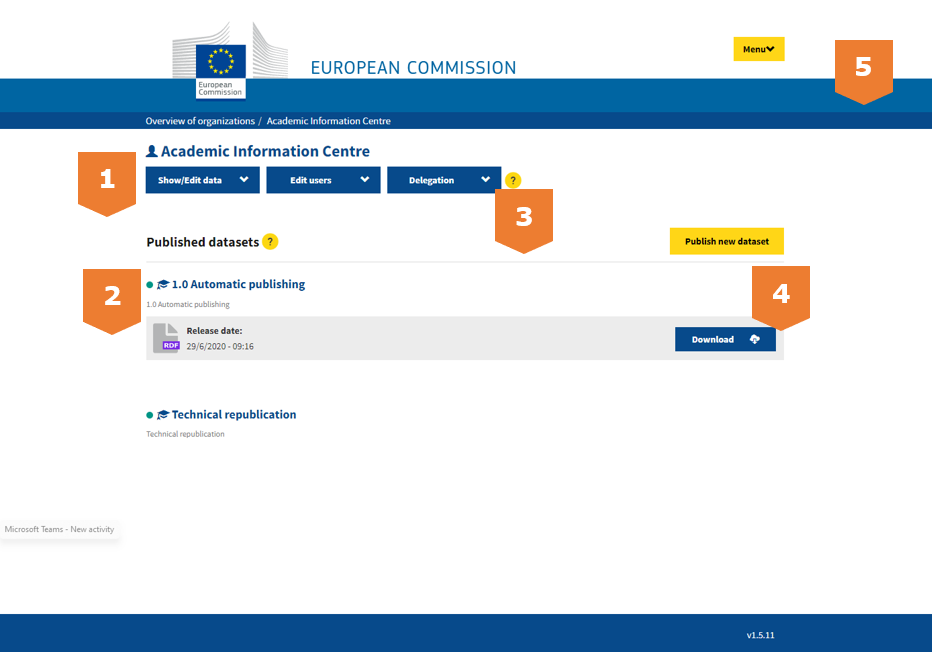

The organisation’s profile page is shown in the figure below. It contains several navigation elements:

- [1] ‘Show/Edit data’ – Allowing you to modify information about your organisation.

- [2] ‘Published datasets’ – Here, you can find the datasets you have published and have the possibility to modify them.

- [3] ‘Delegation’ – Allowing you to delegate the publication rights of your organisation to another organisation.

- [4] ‘Publish new dataset’ – Adding a new dataset with qualification, learning opportunity or accreditation data

- [5] ‘Logout’ – To leave the application

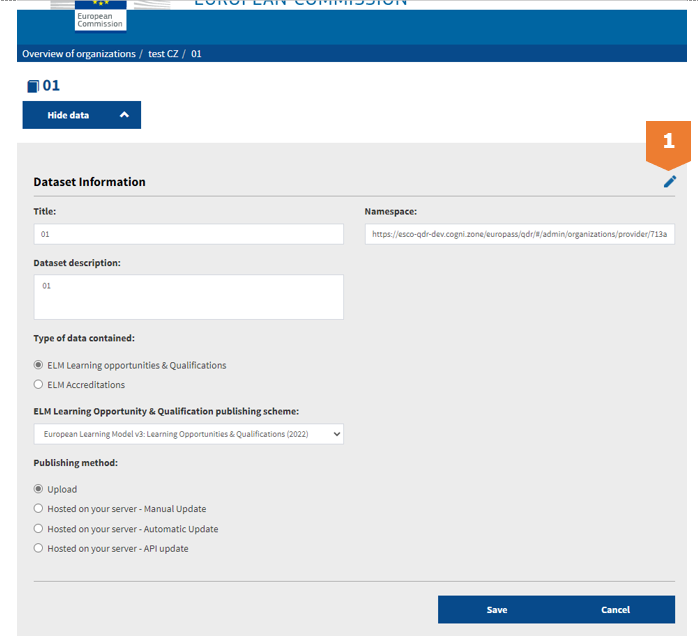

On the right side of the landing page, click on the yellow ‘Publish new dataset’ button to upload a new dataset. This will take you to a pop-up page where you can fill out the information of this dataset, as well as decide how you want your data to be updated.

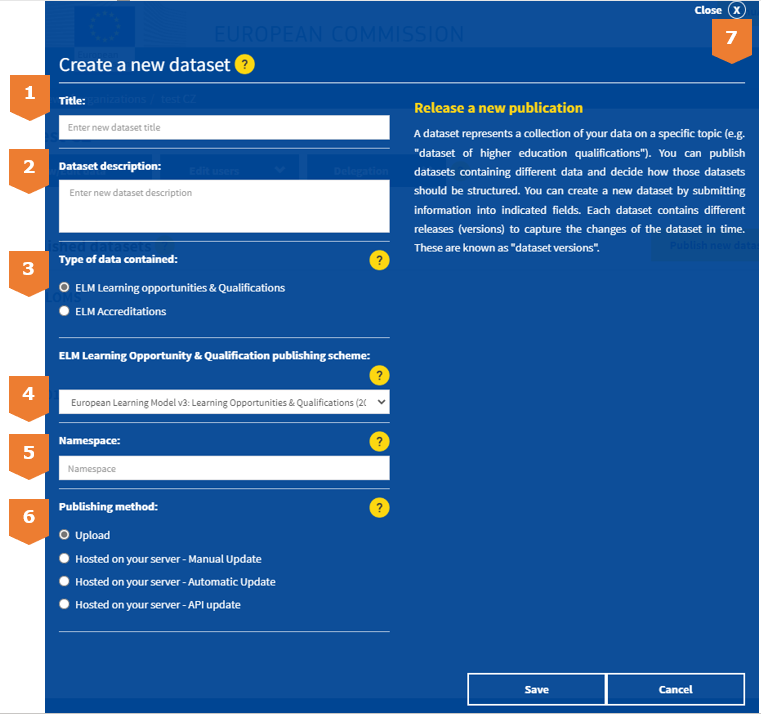

The following information should be indicated during the publication:

- First, fill out the [1] ‘Title’ of your data, then add a brief [2] ‘Dataset description’.

- Then you should indicate what type of data will be published in this dataset in [3] ‘Type of data contained’. The data contained in your dataset should be:

- If publishing Learning Opportunities or Qualifications: ELM Learning opportunities & Qualifications. Among other things, this contains qualifications, which represent the formal outcome of an assessment and validation process which is obtained when a competent body determines that an individual has achieved learning outcomes to given standards. Qualifications may also contain information on accreditation, licensing or authorisation, if relevant.

- If Publishing Accreditations: ELM Accreditations

- In the field ‘Publishing scheme’ [4], select the right option from the drop-down menu to indicate the schema in which your data is published:

- If you have previously published Qualifications using the QMS2 and plan to continue using this schema, please select “Qualification V2 metadata schema (2020)”;

- If you have previously published Learning Opportunities using the LOMS schema and plan to continue using it, please select "Learning Opportunities metadata schema (2019)";

- If you plan to publish Qualifications or Learning Opportunities in the new ELM schema published in May 2023, please select “European Learning Model v3: Learning Opportunities & Qualifications (2022)”

- For Accreditation data the only available schema is “European Learning Model v3: Accreditations (2022)”

- [5] ‘Namespace’: the namespace is used to transform your local identifiers into globally unique ones. Enter a globally unique string that will serve as the namespace for the qualifications or learning opportunities in the dataset. The namespace must have a URL structure (e.g. "http://example.com/"), for example the URL of your organisation or the base URI of your concepts.

- For the [6] ‘Publishing method’, there are two main options: Hosted or Upload. This determines whether you upload your data manually as a file to the QDR, or provide a URL from which the platform fetches your data. Both are explained further in the following sections.

Hosted: you do not need to add a file manually in the platform. This method requires you to host the dataset on a server and to provide the URL pointing to the data when creating the dataset on the QDR portal. The QDR then downloads the data from the provided URL whenever a new version is created. The data must be served over HTTP or HTTPS, on port 80 or 443 only.

Three hosted options are available:

- Hosted on your server - Automatic Update: updates happen automatically. You indicate a URL where you plan to continuously maintain your dataset. With this method, make sure that the HTTP header of your indicated URL is updated every time you want to release a new dataset version. This option provides the following settings:
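The choice in the ‘Publishing scheme’ field [4] follows a small decision rule. The sketch below encodes it as a lookup; the option labels are copied from the menu options listed above, while the function `publishing_scheme` and the short codes for its parameters are hypothetical.

```python
from typing import Optional

# Option labels as listed for the 'Publishing scheme' menu.
ELM_LOQ = "European Learning Model v3: Learning Opportunities & Qualifications (2022)"
ELM_ACCREDITATIONS = "European Learning Model v3: Accreditations (2022)"
QMS2 = "Qualification V2 metadata schema (2020)"
LOMS = "Learning Opportunities metadata schema (2019)"

# Legacy schemas you may continue to use, per data type.
LEGACY = {
    ("qualifications", "QMS2"): QMS2,
    ("learning_opportunities", "LOMS"): LOMS,
}


def publishing_scheme(data_type: str, legacy_schema: Optional[str] = None) -> str:
    """Return the 'Publishing scheme' option to select.

    data_type: 'qualifications', 'learning_opportunities' or 'accreditations'.
    legacy_schema: 'QMS2' or 'LOMS' to keep using a schema you published
    with before, or None to publish in the ELM.
    """
    if data_type == "accreditations":
        if legacy_schema is not None:
            raise ValueError("accreditations can only use the ELM schema")
        return ELM_ACCREDITATIONS
    if data_type not in ("qualifications", "learning_opportunities"):
        raise ValueError(f"unknown data type: {data_type!r}")
    if legacy_schema is None:
        return ELM_LOQ
    try:
        return LEGACY[(data_type, legacy_schema)]
    except KeyError:
        raise ValueError(f"{legacy_schema!r} does not apply to {data_type}") from None
```

Raising an error for combinations such as qualifications with LOMS makes a mismatched choice visible instead of silently falling back to the ELM.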
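Before entering a download URL, you can check it against the constraints above (HTTP or HTTPS, port 80 or 443). A minimal sketch using only the Python standard library follows; `is_acceptable_download_url` is a hypothetical helper, and passing it does not guarantee that the QDR will accept or be able to reach the URL.

```python
from urllib.parse import urlparse

DEFAULT_PORTS = {"http": 80, "https": 443}


def is_acceptable_download_url(url: str) -> bool:
    """True if the URL uses http/https and points to port 80 or 443."""
    parsed = urlparse(url)
    if parsed.scheme not in DEFAULT_PORTS or not parsed.hostname:
        return False
    try:
        # .port is None when the URL has no explicit port; reading it
        # raises ValueError if the port is not a valid number.
        port = parsed.port
    except ValueError:
        return False
    if port is None:
        port = DEFAULT_PORTS[parsed.scheme]
    return port in (80, 443)
```

A URL such as https://data.example.org:8443/qualifications.xml would be rejected, because port 8443 is outside the two allowed ports.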

- In the ‘Download URL’ field, paste the URL from which the QDR will create new versions as your data is updated.

- In the ‘Update Frequency’ field, you can control the frequency of the updates: Monthly, Weekly or Daily.

- In the field ‘Type of provided file’, you can see which file types are supported, and you can indicate the option that applies to you.

- Hosted on your server - API update: you push each update of your dataset to the QDR using an API call. If you plan to use this method, please contact the QDR administrators to obtain an API key.

- Hosted on your server – Manual Update: you will provide a new URL from which the platform can fetch the updated data every time a new version is released.
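For automatic updates, the guide asks you to keep the header of your URL updated, without naming which header the QDR inspects. The sketch below assumes the standard HTTP validators `ETag` and `Last-Modified` and shows how a fetcher could compare them between two checks of the same URL. The function `has_new_version` is hypothetical and only compares header dictionaries, so it runs without network access.

```python
from collections.abc import Mapping


def has_new_version(previous: Mapping[str, str], current: Mapping[str, str]) -> bool:
    """Compare validator headers from two fetches of the same URL.

    Assumption: ETag is checked first, then Last-Modified. If neither
    header is present in both fetches, no change is detected this way.
    """
    # HTTP header names are case-insensitive, so normalise them first.
    prev = {k.lower(): v for k, v in previous.items()}
    curr = {k.lower(): v for k, v in current.items()}
    for name in ("etag", "last-modified"):
        if name in prev and name in curr:
            return prev[name] != curr[name]
    return False
```

Under this assumption, your web server should send a new `ETag` or `Last-Modified` value whenever you replace the dataset file; check with the QDR administrators which header they rely on.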

Upload: you will need to manually add a file in the platform to publish new datasets. This also means you will upload a new file every time your data is updated.

If you select the publication method ‘hosted - automated fetching’, you will not be able to create new versions manually; they will always be created automatically with a daily refresh.

If you select the publication method ‘hosted – manual update’, you will need to create a dataset version manually and provide a URL each time you want to release a new version.

You can change the publishing method of a specific dataset at any time in case you decide that you want to provide future versions using a different method.

Then, you can save everything by clicking ‘save’ at the bottom of the page.

- After you save, you will be redirected to the previous page.

- If at any point you wish to close the page, you can find the [7] ‘x’ button on the top right.

Once you have created a new dataset, you can upload your data. To do so, you need to create a version. First, open the dataset by clicking its name on your profile page. The steps for creating a version differ slightly depending on the publishing method you selected for the dataset. Below we explain ‘How to create a dataset version?’ for each publishing method.

If you chose ‘Hosted – Automated Fetching’ when creating the dataset, versions are created for you automatically, so you do not need to create them through any dialogue.

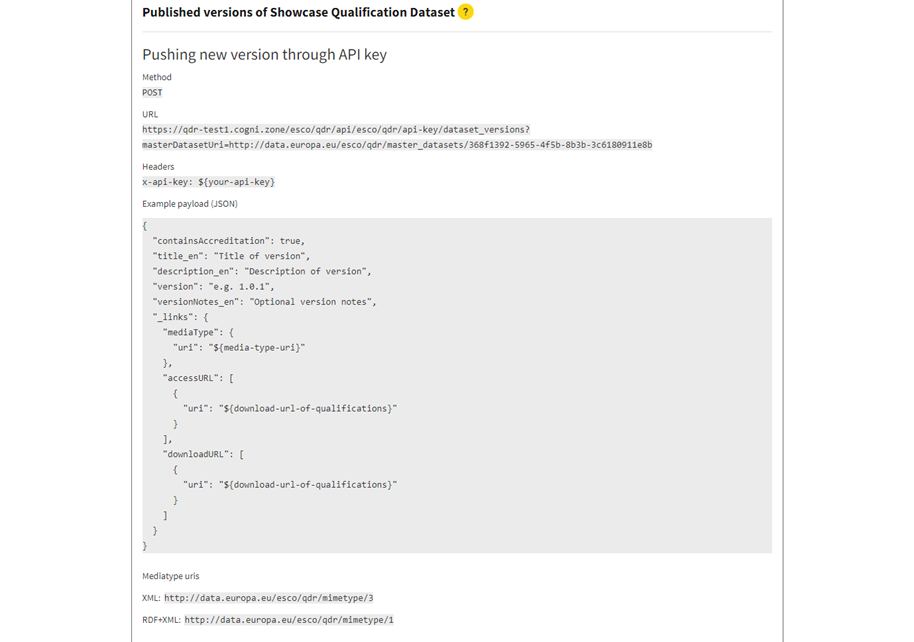

If you chose ‘Hosted on your server - API update’ when creating the dataset, versions are created through the API, so you do not need to create them through any dialogue. In this case, the dataset page shows detailed information on how to perform the API call required to push the data to the QDR.

If you plan to use this method, please contact the QDR administrators to obtain an API key.
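The exact endpoint, headers and payload are shown on your dataset page once you select this method, and they are not reproduced in this guide. Purely to illustrate the general shape of such a call, the sketch below builds (but does not send) an HTTP POST with Python's standard library. The endpoint URL, the `X-API-Key` header name and the JSON field names are all hypothetical placeholders; replace them with the values the QDR gives you.

```python
import json
import urllib.request

# Hypothetical placeholders: use the endpoint and header shown on your dataset page.
ENDPOINT = "https://qdr.example.invalid/api/datasets/DATASET_ID/versions"
API_KEY_HEADER = "X-API-Key"


def build_version_request(api_key: str, title: str, download_url: str) -> urllib.request.Request:
    """Build, without sending, a request announcing a new dataset version."""
    payload = {"title": title, "downloadUrl": download_url}  # hypothetical fields
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={API_KEY_HEADER: api_key, "Content-Type": "application/json"},
        method="POST",
    )


# To actually send it: urllib.request.urlopen(build_version_request(...))
```

Calling a function like this from the same job that regenerates your dataset file keeps the QDR in step with your data without any manual action.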

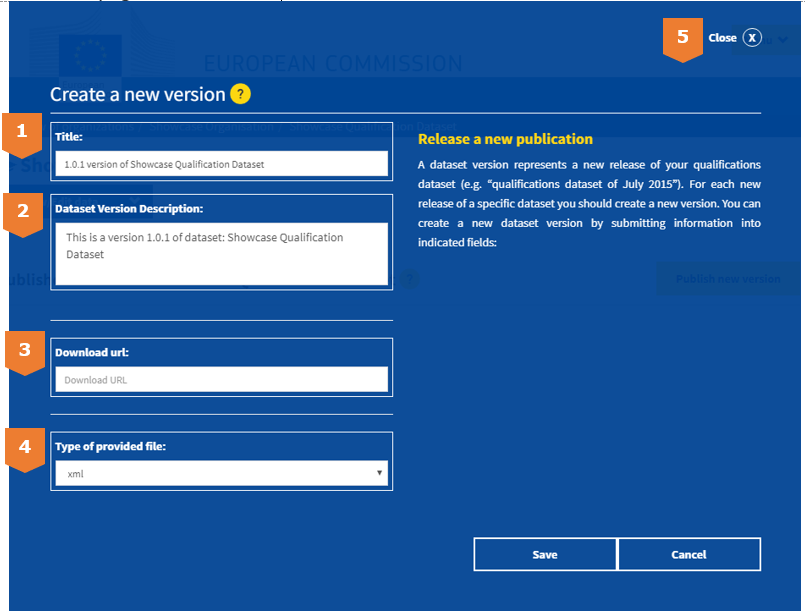

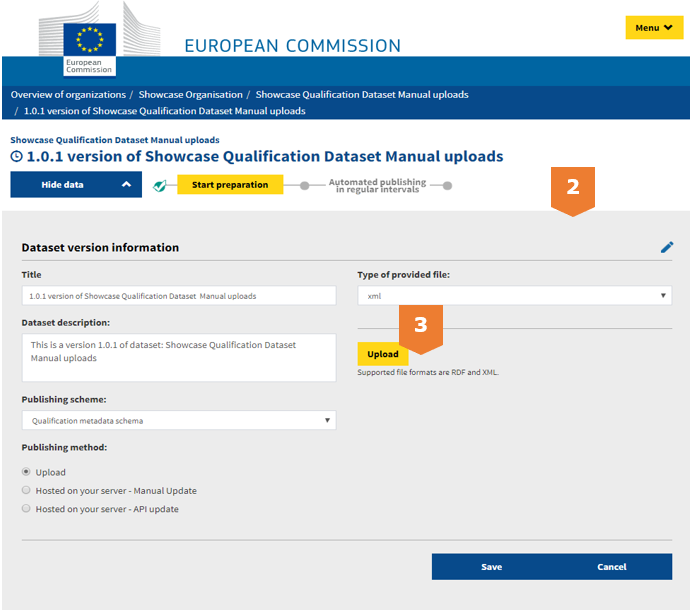

To create a new version with the ‘Hosted on your server – Manual Update’ method, click the ‘Publish new version’ button. This opens a pop-up page similar to the page for creating new datasets. The page looks like this:

The following information should be indicated during the publication:

- First, fill out the [1] ‘Title’ of the version, then add a brief [2] ‘Dataset Version Description’.

- For [3] ‘Download URL’, you will be asked to indicate the URL from where QDR can retrieve the data.

- Finally, in [4] ‘Type of provided file’, indicate the type of file you are providing.

- Then, you can save everything by clicking ‘save’ at the bottom of the page.

- After you save, you will be redirected to the previous page.

- If at any point you wish to close the page, you can find the [5] ‘x’ button on the top right.

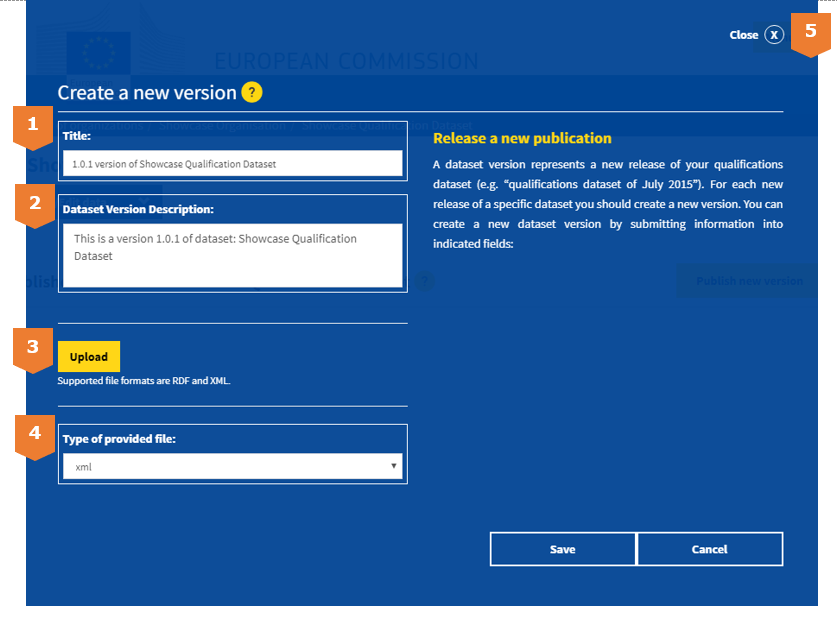

To create a new version with the ‘Upload’ method, click the ‘Publish new version’ button. This opens a pop-up page similar to the page for creating new datasets. The page looks like this:

The following information should be indicated during the publication:

- First, fill out the [1] ‘Title’ of the version, then add a brief [2] ‘Dataset Version Description’.

- For [3] ‘Upload’, you will be asked to upload a file containing your data.

- Finally, in [4] ‘Type of provided file’, indicate the type of file you are providing.

- Then, you can save everything by clicking ‘save’ at the bottom of the page.

- After you save, you will be redirected to the previous page.

- If at any point you wish to close the page, you can find the [5] ‘x’ button on the top right.

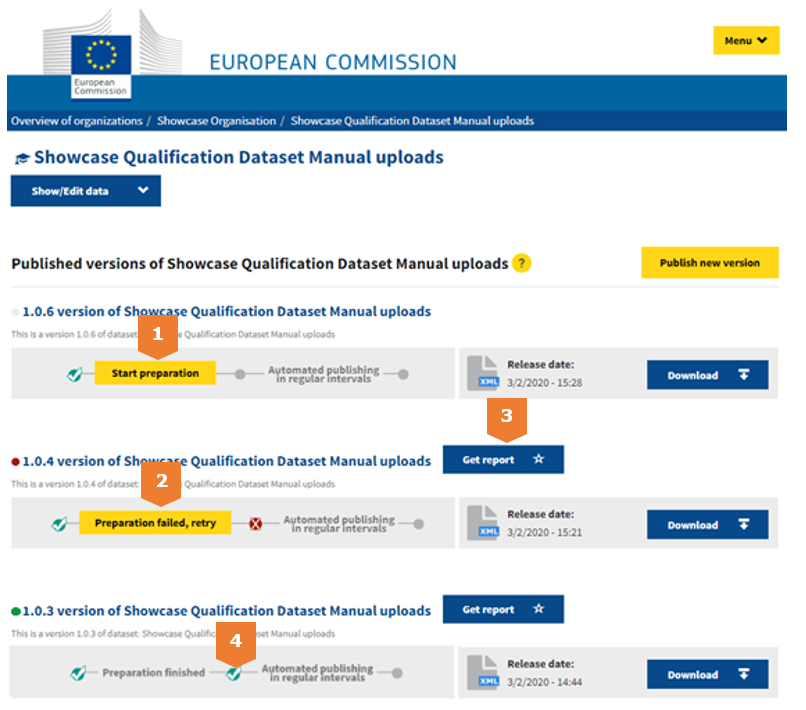

Once you have created a new version you will see it in the listing on the dataset overview. This page looks like this:


Here you can start processing the dataset by clicking [1] ‘Start preparation’. During processing, the platform transforms your data into a unified RDF format according to the metadata schema and validates its compliance. The content of your data is not modified by this process.

The processing may take some time and will result in either success or failure.

- Successful upload of a dataset: you will see a green checkbox [4] for each successful processing step, and you will receive a confirmation e-mail once processing has completed successfully.

- Failure to upload a dataset: If there was a problem, you will see this warning right underneath the version as [2] ‘Preparation failed, retry’.

You can then make the fixes noted in the report ([3] ‘Get report’) and retry processing. Once processing succeeds, you will see the green confirmation ([4]) instead.

If there are any problems with the information provided, you will receive an e-mail with a report, which will redirect you to the platform where you can see and resolve the problem.

Manual initiation of processing applies only to the ‘Upload’ and ‘Hosted on your server - Manual Update’ methods. The other methods do not require it.

Here you are able to edit the metadata about a dataset by clicking on [1].

You can change all the Dataset Information: Title, Dataset description, Namespace, Type of data contained, Qualification publishing scheme, publishing method and what is included in your dataset.

To edit a dataset version for manual uploads, follow these steps:

- Click on the dataset version you want to edit.

- On the dataset page, click ‘Show/Edit data’. A form containing the metadata of the dataset version opens; click the edit pen [2] to start changing the information.

- You can also upload a new dataset file to this version by clicking ‘upload’ [3].

It is recommended to always provide the most up-to-date data. The publishing method you choose determines how easily you can keep your updates frequent and relevant:

- Hosted on your server – Automatic Update: The updates happen automatically. It is essential to ensure that the HTTP header of your indicated URL is updated every time you want to release a new dataset version.

- Hosted on your server – API update: You can publish the latest version with an API call.

- Hosted on your server – Manual Update: For every new version, you need to place the new file on your server and provide its URL in the QDR web platform.

- Upload: With this publishing option, you have to update the dataset manually by creating a new dataset version, as explained in the section ‘Creating dataset versions: Upload’. The system always treats the last uploaded version of the dataset as the latest and correct version.
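The guide does not say which header the QDR reads. As a minimal sketch, assuming the QDR compares standard HTTP validators such as `ETag` or `Last-Modified` between checks (an assumption, not documented QDR behaviour), you can verify on your own side that your server updates them when you publish a new file. The function names below are hypothetical.

```python
from email.utils import parsedate_to_datetime
from typing import Mapping, Optional
from urllib.request import Request, urlopen


def get_header(headers: Mapping[str, str], name: str) -> Optional[str]:
    """Look up a header case-insensitively (HTTP header names are case-insensitive)."""
    for key, value in headers.items():
        if key.lower() == name.lower():
            return value
    return None


def fetch_headers(url: str) -> dict[str, str]:
    """Send a HEAD request to your dataset URL and return its response headers."""
    with urlopen(Request(url, method="HEAD"), timeout=30) as response:
        return dict(response.headers.items())


def new_version_available(previous: Mapping[str, str], current: Mapping[str, str]) -> bool:
    """Hypothetical change check: prefer ETag, fall back to Last-Modified."""
    old_etag, new_etag = get_header(previous, "ETag"), get_header(current, "ETag")
    if old_etag and new_etag:
        return old_etag != new_etag
    old_lm = get_header(previous, "Last-Modified")
    new_lm = get_header(current, "Last-Modified")
    if old_lm and new_lm:
        # HTTP dates are in GMT, so the parsed datetimes are directly comparable.
        return parsedate_to_datetime(new_lm) > parsedate_to_datetime(old_lm)
    # Without either validator, a header-based check cannot tell; treat as changed.
    return True
```

For example, call `fetch_headers` on your dataset URL before and after replacing the file, then use `new_version_available` to confirm that the headers changed. If your server sends neither header, a header-based poller cannot distinguish a new version from an old one.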

Automatic and API updates are more efficient, time-saving ways to keep datasets up to date, and it is highly recommended to use one of these publishing options.

Publishing information on qualifications and learning opportunities is crucial to ensure that Europass can work as a tool to support lifelong learning and career management (Article 3.2 of the Europass Decision).

Working towards an increase in data quality is fundamental to having relevant, rich, accurate, complete, and trustworthy information on qualifications, learning opportunities and accreditations. This includes ensuring completeness of the data fields, the correct use of data fields from the European Learning Model and a correct structure of the data.

Improving data quality is primarily a responsibility of Member States, as owners and/or providers of the data, while the European Commission provides various support points (information provision, guidance and technical support).

The QDR should contain clear, rich, up-to-date and reliable information on qualifications, learning opportunities and accreditations from all countries involved in Europass and in the European Qualifications Framework (EQF). The following elements are crucial to ensure high data quality:

- Completeness: besides the mandatory data fields, the data should include as many of the recommended and optional fields of the European Learning Model as possible;

- Consistency (structured data): the data should be structured in line with the European Learning Model. Structured data are processed more effectively by the Artificial Intelligence techniques behind the “course recommender” system;

- Accuracy: the data should be reliable and free of errors;

- Up to date: the data should be updated regularly. The database should not contain outdated data, such as wrong start dates or expired web links.

Focusing on high-quality data on qualifications and learning opportunities in Europass matters for several reasons:

- The search function: when data is complete, structured and placed in the correct fields, search results are more accurate and learning opportunities and qualifications are displayed better. For example, when a user searches for a particular “thematic field” and a country's learning opportunities or qualifications are tagged with the wrong thematic field, the results may be inaccurate and of little use to the end user, and therefore poorly visualised;

- To provide end users and stakeholders with high-quality, relevant and extensive information on the details of a learning opportunity or qualification;

- To suggest courses to end-users based on relevant and correct data (recommender system), using Artificial Intelligence techniques.

Poor data quality makes the data less reliable. This can cause misunderstandings for users, make items harder to find through the search and leave end users less satisfied in general.

Following an analysis of the data currently available in the QDR, the Commission identified the following recurrent data quality issues, which should be addressed to ensure that the data meet the quality standards above:

- Inappropriate use of data fields: the information provided is not relevant or correct for the specific data field. Examples include:

  - wrong ISCED-F or language codes

  - very generic data

  - a single data field containing a mix of multiple items

  - uninformative fields (e.g. "see description")

  - fields containing mark-up language.

- Poor quality of data in terms of structure, completeness and/or precision. This was observed particularly in descriptions of learning outcomes, which were either unstructured (e.g. all learning outcomes jumbled into one text box) or lacking information on the learning outcomes.

- Duplication of information across several data fields or across different learning opportunities/qualifications. Examples include using EQF level descriptors to fill in learning outcome fields, or identical learning outcome descriptions for different learning opportunities or qualifications.

- Many (non-mandatory) data fields left empty

- Dummy data provided for certain data fields
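Several of these issues can be caught before publishing. The sketch below is a minimal, hypothetical pre-publication check on records held as flat Python dictionaries. The field names (`isced_f_code`, `learning_outcomes`), the placeholder word lists and the 2-4 digit ISCED-F pattern are illustrative assumptions, not QDR or European Learning Model rules, and the check does not replace the validation the QDR performs during processing.

```python
import re
from collections import defaultdict

# Hypothetical placeholder lists; extend them with values seen in your own data.
UNINFORMATIVE = {"see description", "n/a", "-", "tbd"}
DUMMY = {"test", "lorem ipsum", "xxx", "dummy"}
MARKUP = re.compile(r"<[^>]+>")  # crude detection of HTML/XML tags in text fields
ISCED_F = re.compile(r"\d{2,4}")  # assumed: ISCED-F 2013 broad/narrow/detailed codes


def check_record(record: dict[str, str]) -> list[str]:
    """Return human-readable issues found in one (hypothetical) flat record."""
    issues = []
    for field, value in record.items():
        text = (value or "").strip()
        lowered = text.lower()
        if not text:
            issues.append(f"{field}: empty")
        elif lowered in UNINFORMATIVE:
            issues.append(f"{field}: uninformative value {text!r}")
        elif lowered in DUMMY:
            issues.append(f"{field}: dummy value {text!r}")
        elif MARKUP.search(text):
            issues.append(f"{field}: contains mark-up")
    code = (record.get("isced_f_code") or "").strip()
    if code and not ISCED_F.fullmatch(code):
        issues.append(f"isced_f_code: {code!r} is not a 2-4 digit ISCED-F code")
    return issues


def duplicated_outcomes(records: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Map each learning-outcome text shared by several records to their IDs."""
    seen = defaultdict(list)
    for record_id, record in records.items():
        text = (record.get("learning_outcomes") or "").strip().lower()
        if text:
            seen[text].append(record_id)
    return {text: ids for text, ids in seen.items() if len(ids) > 1}
```

A check like this flags candidates for review rather than proving that data is correct; for example, a valid-looking ISCED-F code can still be the wrong thematic field for a course.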

Poor data quality can lead to poor or even wrong results and suggestions, and limits the full potential of Europass. In the short term, the Commission will provide guidelines on how to improve data quality (tips and tricks) and a “golden data example”: a course in the Europass interface mapped to the fields in the data model.

In the longer term, the Commission aims to provide targeted, personalised support (data quality reports), or to explore ways of checking the quality of the data within the QDR using key performance indicators (KPIs).

The QDR support team is available to answer any questions or concerns. If needed, a bilateral support call can also be arranged with our technical support team. For any questions, do not hesitate to contact us at EMPL-ELM-SUPPORT@ec.europa.eu.
